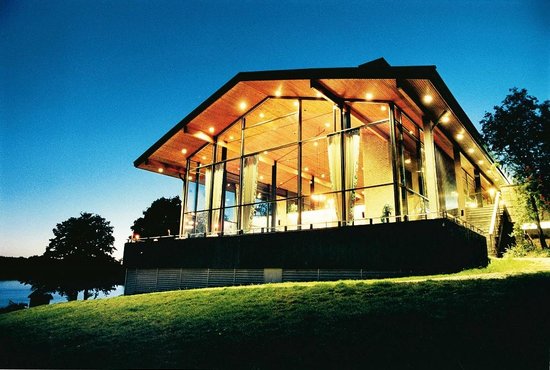

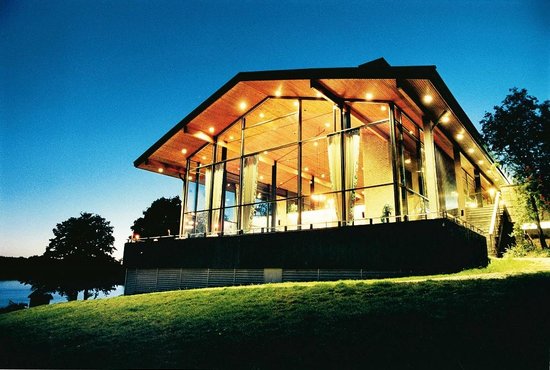

The 13th Cloud Control Workshop, held at Skåvsjöholm in the Stockholm archipelago, is a high-profile workshop with participation by invitation only, targeting academic and industrial technology researchers and leaders from all around the world.

As always, the aim of the 13th Cloud Control Workshop is to foster multidisciplinary research in cloud management, leveraging expertise in areas such as autonomic computing, control theory, distributed systems, energy management, machine learning, mathematical statistics, performance modeling, systems management, etc.


By providing an understanding of the research challenges ahead and by fostering multidisciplinary research collaborations, the ambition is to shape the future of resource management for any type of resource important for the cloud, from single servers to mega-scale datacenters, including, e.g., mobile edge clouds, fog infrastructure, and disaggregated and software-defined infrastructures on any scale, from rack-scale to datacenter-scale. The workshop targets leading researchers from any scientific discipline with potential to contribute to this multidisciplinary topic.


The ambition

The ambition is to create the type of event where people come, not to publish and extend their CVs, but to discuss and learn, and most importantly, to establish or strengthen connections with interesting people of varying backgrounds, all highly relevant to solving our problems in cloud control. To facilitate this, in addition to a very discussion-oriented program, we host the event at a small, relaxing, and inspiring location, with many opportunities to interact during the three days of the workshop.

Workshop format

The workshop will mainly focus on multidisciplinary research discussions in parallel sessions, but will also include some keynotes and some short scientific presentations on planned, ongoing, or completed research. Participants are encouraged to propose discussion topics beforehand and to take an active part in any discussions, with the ambition of making progress on any topic of interest to the participants. The 13th edition will strive for a strong focus on cloud management using the techniques listed above, rather than on cloud in general.


Program

The workshop program includes 4 keynotes, 20 short presentations, 20 long discussion sessions, and a poster session; for details, see the Program. As an important part of the meeting is the opportunity to have informal discussions in a nice atmosphere, we will make sure to provide substantial time for that, with all participants staying at the same place with few other people around.

Participants

For the 13th Cloud Control Workshop, we aim for participation similar to that of the 11th edition, which had 82 registered participants, including 57 academic researchers and 25 industrial researchers and technology leaders from companies such as Ericsson, Intel, Google, Red Hat, Twitter, Tieto, SAAB, and others. The participants, most of whom were rather senior, represented organizations in 16 countries, with Sweden dominating, followed (in order) by the United States, Ireland, Italy, France, Germany, Norway, Israel, the Netherlands, Switzerland, and more. To ensure the continued development and renewal of the workshop series, we made sure that a majority of the participants attended for the first time.

Participation is by invitation only. If you are interested in participating, please send an email to Erik Elmroth (elmroth@cs.umu.se). Note, however, that the number of participants is limited. Early confirmed participants include the following:

Keynote Speakers

Prashant Shenoy, Professor and Associate Dean, University of Massachusetts Amherst, MA, USA

Designing Systems and Applications for Transient Computing

Traditional distributed systems are built under the assumption that system resources will be available for use by applications unless there is a failure. Transient computing is a new phenomenon that challenges this assumption by allowing system resources to become unavailable at any time. Transiency arises in many domains, such as cloud computing (in the form of revocable spot servers) and data centers that rely on variable electricity prices or intermittent renewable sources of energy. Transiency is inherently different from fault tolerance since resources do not fail; rather, they become temporarily unavailable, and traditional fault tolerance mechanisms are not suitable for handling transient resource unavailability.

In this talk, I will discuss how systems and applications need to be rethought to run on transient computing systems. I will first describe a system called Yank that uses a new bounded-time virtual machine migration mechanism to handle transiency at a system level while being transparent to applications. I will then discuss how modern distributed applications can be made transiency-aware and present a Spark-variant called Flint that we have developed to exploit transient cloud computing. I will end with open research questions in this area and directions for future work.

Speaker Biography

Prashant Shenoy is currently a Professor and Associate Dean in the College of Information and Computer Sciences at the University of Massachusetts Amherst. He received the B.Tech. degree in Computer Science and Engineering from the Indian Institute of Technology, Bombay and the M.S. and Ph.D. degrees in Computer Science from the University of Texas, Austin. His research interests lie in distributed systems and networking, with a recent emphasis on cloud and green computing. He has been the recipient of several best paper awards at leading conferences, including a Sigmetrics Test of Time Award. He serves on the editorial boards of several journals and has served as the program chair of over a dozen ACM and IEEE conferences. He is a fellow of the IEEE and the AAAS and a distinguished member of the ACM.

Tarek Abdelzaher, Professor and Willett Faculty Scholar, University of Illinois at Urbana Champaign, IL, USA

Deep-Learning-as-a-Service for IoT Systems

The Internet of Things (IoT) heralds the emergence of multitudes of computing-enabled networked everyday devices with sensing capabilities in homes, cars, workplaces, and on our persons, leading to ubiquitous smarter environments and smarter cyber-physical “things”. The next natural step in this computing evolution is to develop the infrastructure needed for these computational things to collectively learn. Advances in deep learning offer remarkable results but require significant computing resources. This leads to the idea of “Deep-Learning as a Service”. The talk will describe back-end services that aim to bring advantages of deep learning to the emerging world of embedded IoT devices. The output of these services is trained neural networks. We discuss several core challenges in training such neural networks for subsequent use in IoT systems. Specifically, while the training process may be resource-intensive, the resulting trained network must be optimized for execution on a resource-limited device while at the same time offering accuracy guarantees. Evaluation results and experiences presented offer encouraging evidence of viability of Deep Learning as a Service for IoT.

Speaker Biography

Tarek Abdelzaher received his Ph.D. in Computer Science from the University of Michigan in 1999. He is currently a Professor and Willett Faculty Scholar at the Department of Computer Science, the University of Illinois at Urbana Champaign. He has authored/coauthored more than 200 refereed publications in real-time computing, distributed systems, networking, and control. He is an Editor-in-Chief of the Journal of Real-Time Systems, and has served as Associate Editor of the IEEE Transactions on Mobile Computing, IEEE Transactions on Parallel and Distributed Systems, IEEE Embedded Systems Letters, the ACM Transactions on Sensor Networks, and the Ad Hoc Networks Journal. He has chaired (as Program or General Chair) several conferences in his area, including RTAS, RTSS, IPSN, Sensys, DCoSS, ICDCS, and ICAC. Abdelzaher’s research interests lie broadly in understanding and influencing properties of networked embedded, social and software systems in the face of increasing complexity, distribution, and degree of interaction with an external physical and social environment. Tarek Abdelzaher is a recipient of the IEEE Outstanding Technical Achievement and Leadership Award in Real-time Systems (2012), the Xerox Award for Faculty Research (2011), as well as several best paper awards. He is a member of IEEE and ACM.

John Wilkes, Principal Software Engineer, Google, Mountain View, CA, USA

Building the Warehouse Scale Computer

Imagine some product team inside Google wants 100,000 CPU cores + RAM + flash + accelerators + disk in a couple of months. We need to decide where to put them, when; whether to deploy new machines, or re-purpose/reconfigure old ones; ensure we have enough power, cooling, networking, physical racks, data centers and (over a longer time-frame) wind power; cope with variances in delivery times from supply logistics hiccups; do multi-year cost-optimal placement+decisions in the face of literally thousands of different machine configurations; keep track of parts; schedule repairs, upgrades, and installations; and generally make all this happen behind the scenes at minimum cost.

And then after breakfast, we get to dynamically allocate resources (on the small-minutes timescale) to the product groups that need them most urgently, accurately reflecting the cost (opex/capex) of all the machines and infrastructure we just deployed, and monitoring and controlling the datacenter power and cooling systems to achieve minimum overheads – even as we replace all of these on the fly.

This talk will highlight some of the exciting problems we’re working on inside Google to ensure we can supply the needs of an organization that is experiencing (literally) exponential growth in computing capacity.

Speaker Biography

John Wilkes has been at Google since 2008, where he is working on automation for building warehouse scale computers. Before this, he worked on cluster management for Google’s compute infrastructure (Borg, Omega, Kubernetes). He is interested in far too many aspects of distributed systems, but a recurring theme has been technologies that allow systems to manage themselves.

He received a PhD in computer science from the University of Cambridge, joined HP Labs in 1982, and was elected an HP Fellow and an ACM Fellow in 2002 for his work on storage system design. Along the way, he’s been program committee chair for SOSP, FAST, EuroSys and HotCloud, and has served on the steering committees for EuroSys, FAST, SoCC and HotCloud. He’s listed as an inventor on 50+ US patents, and has an adjunct faculty appointment at Carnegie Mellon University. In his spare time he continues, stubbornly, trying to learn how to blow glass.

Ling Liu, Professor, Georgia Institute of Technology, Atlanta, GA, USA

Shared Memory and Disaggregated Memory in Virtualized Clouds

Cloud applications are typically deployed using application deployment models built on virtual machines (VMs) and/or containers. These applications enjoy high throughput and low latency if they are served entirely from main memory. However, when these applications cannot fit their working sets in the real memory of their VMs or containers, they suffer severe performance loss due to excess memory paging and thrashing. Even when unused host memory or unused remote memory is present in other VMs on the same host or across the cluster, these applications are unable to benefit from that idle host/remote memory. In this keynote, I will first revisit and examine the problem of memory imbalance and temporal usage variations in virtualized Clouds and discuss the potential benefits of dynamically managing and sharing unused host memory and unused remote memory. Then I will describe some systems solutions for exploiting shared memory and disaggregated memory transparently, opportunistically, and non-intrusively, and present our initial results for efficient sharing of host and remote memory. The talk will conclude with a discussion on integrating shared memory and disaggregated memory management as an integral part of Cloud control for hosting big data and machine learning workloads in Cloud datacenters.

Speaker Biography

Prof. Dr. Ling Liu is a Professor in the School of Computer Science at Georgia Institute of Technology. She directs the research programs in the Distributed Data Intensive Systems Lab (DiSL), examining various aspects of large-scale data intensive systems. Prof. Liu is an internationally recognized expert in the areas of Database Systems, Distributed Computing, Internet and Web computing, and Service Oriented Computing. Prof. Liu has published over 300 international journal and conference articles, and is a recipient of best paper awards from a number of top venues, including ICDCS 2003, WWW 2004, 2005 Pat Goldberg Memorial Best Paper Award, IEEE CLOUD 2012, IEEE ICWS 2013, ACM/IEEE CCGrid 2015, IEEE Edge 2017. Prof. Liu is an elected IEEE Fellow and a recipient of the IEEE Computer Society Technical Achievement Award. Prof. Liu has served as general chair and PC chair of numerous IEEE and ACM conferences in the fields of big data, cloud computing, data engineering, distributed computing, and very large databases, and served as the editor in chief of IEEE Transactions on Services Computing from 2013 to 2016. Prof. Liu’s research is primarily sponsored by NSF, IBM and Intel.

Workshop Details

Material

Travel

The venue is located a 35-minute drive from Arlanda airport, and there will be a chartered bus before the start and after the end of the workshop. Preliminary times for local transport are:

- Bus leaves Arlanda Airport at 9.00 on June 13 (from Terminal 4’s bus stops). Gathering immediately outside the exit of Terminal 4 at 8.45.

- Bus arrives at Arlanda Airport (Terminal 4) no later than 16.30 on June 15.

Registration fee

The workshop registration fee covers not only participation but also a hotel room for two nights from June 13 to June 15, all meals and coffees during the workshop, some drinks for dinners, as well as bus transportation from Arlanda airport to the workshop venue and back.

- Total workshop fee for 3 days and 2 nights in single room: 5200 SEK + tax (6095 SEK incl tax), incl. all meals, coffees, some dinner drinks, transportation, etc.

To register please use the link provided in your invitation email. Since participation is by invitation only, the link for registration is provided only in your invitation email.

Although we expect the discussions to last into the bright summer nights, we also recommend outdoor activities such as various kinds of games and sports, simply enjoying nature, going for a run or for a swim in the sea, trying out the canoes, or enjoying the sauna, relaxation area, and outdoor jacuzzi.

Program Committee

Erik Elmroth, Umeå University (Chair)

Ahmed Ali-ElDin, University of Massachusetts, Amherst

Olumuyiwa Ibidunmoye, Umeå University

Mina Sedaghat, Ericsson Research

Sponsors

Financial support for the workshop has been received from eSSENCE – The e-science collaboration.
